Radiology Report Labeling on MIMIC-CXR

Using CLIP to produce radiologist notes for X-rays

Abstract

This research explores using CLIP (Contrastive Language-Image Pre-training) to label chest radiography images from the MIMIC-CXR dataset, which contains 377,110 chest X-ray images with associated radiologist notes. A subset of 18,015 images/text pairs was used, which were then embedded into 512-dimensional vectors using CLIP. Two experiments were conducted: zero-shot and fine-tuned approaches. The process involved embedding training text examples to create a search space, then using nearest neighbor with cosine similarity to match test images to the most relevant text vectors. Results showed limited success due to the specificity of medical images and the small training dataset.

Introduction

CLIP is a multi-modal embedding model which can be used to identify similarities between text and images. This study investigates CLIP’s performance on specialized medical datasets. The MIMIC-CXR dataset from Beth Israel Deaconess Medical Center contains chest radiographs with free-text radiology reports. The problem is framed as finding the most similar report for a new patient’s X-ray to potentially help doctors diagnose patients more efficiently.

Dataset

A total of 18,015 images/text pairs from the MIMIC-CXR Database v2.0.0 were used, which contains 377,110 images with 227,835 radiographic studies. The focus was on the most diagnostically relevant sections of the reports (impression section first, findings section if impression wasn’t available, or the final section as a last resort).

Methodology

Unlike previous approaches that train CNN models on labeled classifications, CLIP was used directly on X-ray images and text report pairs. This preserves important details that might be lost when converting to simple labels (e.g., “severe pneumonia” vs. “mild pneumonia”).

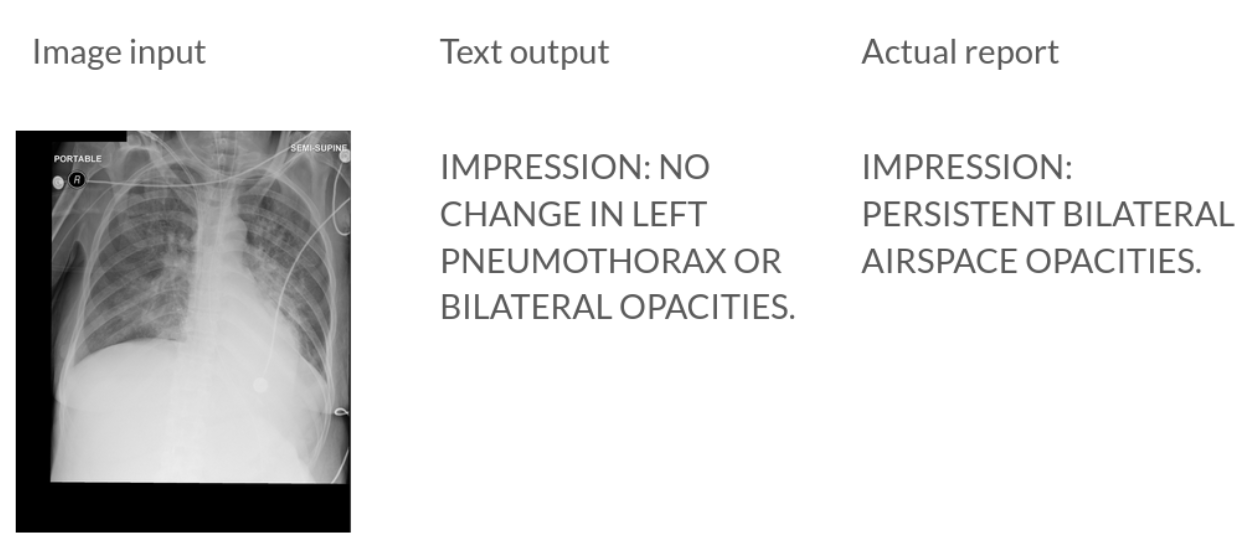

Figure 1: An example of the primary use case of the model. (Left) The input image the model has never seen before. (Center) The model outputs a text description that is semantically similar to (Right) the actual report.

Two methods were employed: zero-shot with CLIP and a fine-tuned version of CLIP. The dataset was split into 80/10/10 train/validation/test splits and experiments were conducted with both zero-shot and fine-tuned CLIP models (ViT-B/32). We split our 18,015 image/text pairs from MIMIC into an 80/10/10 train/validation/test split. This gave 14,412 image/text pairs for fine-tuning.

For fine-tuning the CLIP model, both text and image training data from the MIMIC-CXR dataset were used. During this process, some of the early layers of the model were frozen and training was prioritized for the deeper layers. The model was then trained for 20 epochs on similar settings as in the original CLIP paper but with lowered learning rate and weight decay. CLIP’s loss function was tracked on the validation set, and the model with the best performance was saved.

For evaluation, output text was converted into multi-labels using CheXpert labeler and compared to ground truth labels across metrics including precision, recall, F1-score, accuracy, and AUROC.

Results

Zero-shot Model Results:

| Condition | Precision | Recall | F1 | Accuracy | AUROC | Positives |

|---|---|---|---|---|---|---|

| No Finding | 0.396 | 0.058 | 0.102 | 0.608 | 0.502 | 686 |

| Enlarged Cardiomediastinum | 0.000 | 0.000 | NaN | 0.906 | 0.495 | 154 |

| Cardiomegaly | 0.301 | 0.222 | 0.256 | 0.674 | 0.524 | 455 |

| Lung Lesion | 0.055 | 0.064 | 0.059 | 0.912 | 0.507 | 78 |

| Lung Opacity | 0.236 | 0.584 | 0.337 | 0.462 | 0.504 | 421 |

| Edema | 0.218 | 0.157 | 0.183 | 0.648 | 0.485 | 451 |

| Consolidation | 0.000 | 0.000 | NaN | 0.903 | 0.499 | 171 |

| Pneumonia | 0.242 | 0.527 | 0.331 | 0.456 | 0.479 | 461 |

| Atelectasis | 0.216 | 0.377 | 0.274 | 0.545 | 0.486 | 411 |

| Pneumothorax | 0.192 | 0.728 | 0.303 | 0.345 | 0.490 | 353 |

| Pleural Effusion | 0.319 | 0.525 | 0.397 | 0.443 | 0.462 | 629 |

| Pleural Other | 0.000 | 0.000 | NaN | 0.979 | 0.498 | 32 |

| Fracture | 0.031 | 0.111 | 0.048 | 0.847 | 0.493 | 63 |

| Support Devices | 0.253 | 0.561 | 0.349 | 0.512 | 0.529 | 421 |

Zero-shot Model Overall Results:

| Metric | Value |

|---|---|

| Average F1 Score | 0.2399 |

| Average Accuracy | 0.6600 |

| Average AUROC | 0.497 |

(Note that the F1 score above is calculated from 11 valid categories, excluding the 3 categories with NaN values.)

Fine-tuned Model Results:

| Condition | Precision | Recall | F1 | Accuracy | AUROC | Positives |

|---|---|---|---|---|---|---|

| No Finding | 0.337 | 0.214 | 0.262 | 0.541 | 0.478 | 686 |

| Enlarged Cardiomediastinum | 0.031 | 0.019 | 0.024 | 0.864 | 0.481 | 154 |

| Cardiomegaly | 0.234 | 0.081 | 0.121 | 0.701 | 0.496 | 455 |

| Lung Lesion | 0.050 | 0.077 | 0.061 | 0.897 | 0.505 | 78 |

| Lung Opacity | 0.240 | 0.380 | 0.294 | 0.573 | 0.506 | 421 |

| Edema | 0.251 | 0.166 | 0.200 | 0.667 | 0.500 | 451 |

| Consolidation | 0.114 | 0.135 | 0.123 | 0.819 | 0.512 | 171 |

| Pneumonia | 0.236 | 0.293 | 0.262 | 0.577 | 0.484 | 461 |

| Atelectasis | 0.238 | 0.151 | 0.185 | 0.696 | 0.504 | 411 |

| Pneumothorax | 0.186 | 0.232 | 0.207 | 0.650 | 0.492 | 353 |

| Pleural Effusion | 0.342 | 0.243 | 0.284 | 0.573 | 0.496 | 629 |

| Pleural Other | 0.083 | 0.031 | 0.045 | 0.977 | 0.513 | 32 |

| Fracture | 0.020 | 0.063 | 0.031 | 0.860 | 0.476 | 63 |

| Support Devices | 0.229 | 0.197 | 0.212 | 0.657 | 0.497 | 421 |

Fine-tuned Model Overall Results:

| Metric | Value |

|---|---|

| Average F1 Score | 0.1651 |

| Average Accuracy | 0.7180 |

| Average AUROC | 0.4960 |

Both zero-shot and fine-tuned models performed similarly, with the fine-tuned model slightly better on AUROC for most of the five clinically important pathologies (atelectasis, cardiomegaly, consolidation, edema, and pleural effusion). However, overall performance was lower than CNN models directly trained on labels in previous research, which achieved AUROC scores >0.7 or even >0.8, while this approach achieved scores mostly around 0.5.

Conclusion

This is a difficult problem. To the untrainted eye the radiology reports do not have any clear differences. CLIP pre-training does not focus on enabling the network to distinguish such high-frequency patterns in the overall image. While fine-tuning improved the model slightly, the results remained far from clinically useful standards. We are also limited here because we train our model to output full text descriptions, which is then categorized into a label using CheXpert. Models trained directly on CheXpert labels would naturally perform better when evaluated on those same labels. We are also very limited by the ammount of training data and compute. Future research directions include:

- Fine-tuning CLIP on a larger portion of the dataset

- Experimenting with k-nearest neighbors search for the labels

- Better utilizing multiple associated images from the same study

- Incorporating CheXpert labeling while preserving detailed text

We believe further development could improve pathology classification beyond simple labeling.